Abstract

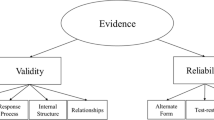

Systems thinking is an important area of study across STEM and non-STEM disciplines. The Earth system approach that drives the geosciences, and that is essential to issues of sustainability, makes systems thinking a critical skill in geoscience education. Understanding how systems thinking skills develop in the geosciences depends on having a tool to assess Earth system thinking skills. This paper documents the development of the Earth system thinking concept inventory (EST CI). The instrument was administered in multiple iterations using Amazon’s Mechanical Turk (MTurk) (n = 1004). Evidence of validity and reliability was accrued using elements of both classical test theory (CTT) and item response theory (IRT). Key metrics used in this study include item difficulty, item discrimination, infit and outfit statistics, differential item functioning, item-person maps, item characteristic curves, and KR-20 statistics. Using these two approaches in a complementary fashion allowed us to work iteratively and to provide robust evidence of both validity and reliability. Additionally, the instrument is semi-customizable: the language describing feedbacks can be switched between “positive”/“negative” and “reinforcing”/“balancing” terminology, with the latter resulting in better reliability among a largely novice audience.
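Several of the CTT and IRT metrics named above can be illustrated with a short sketch. The Python below is a minimal illustration, not the authors' analysis code: it assumes dichotomously scored (0/1) items and a hypothetical six-respondent, four-item toy dataset, and all function names are our own. It computes CTT item difficulty, item discrimination as a corrected point-biserial correlation (the abstract does not say which discrimination index was used, so this is an assumed, common choice), and KR-20, plus the Rasch item characteristic curve and infit/outfit mean-squares for an item whose person abilities and difficulty are assumed to be already known.

```python
import math
from typing import List, Tuple

# Dichotomously scored responses: one row per respondent, one column per item
# (1 = correct, 0 = incorrect). Hypothetical toy data, not EST CI data.
Responses = List[List[int]]


def item_difficulty(data: Responses) -> List[float]:
    """CTT item difficulty (p-value): proportion answering each item correctly."""
    n = len(data)
    return [sum(row[j] for row in data) / n for j in range(len(data[0]))]


def _pearson(x: List[float], y: List[float]) -> float:
    """Pearson correlation; assumes neither variable is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def item_discrimination(data: Responses) -> List[float]:
    """Corrected point-biserial: correlate each item with the total on the other items."""
    result = []
    for j in range(len(data[0])):
        item = [row[j] for row in data]
        rest = [sum(row) - row[j] for row in data]
        result.append(_pearson(item, rest))
    return result


def kr20(data: Responses) -> float:
    """Kuder-Richardson Formula 20 internal-consistency reliability for 0/1 items."""
    k, n = len(data[0]), len(data)
    sum_pq = sum(p * (1 - p) for p in item_difficulty(data))
    totals = [sum(row) for row in data]
    mean_t = sum(totals) / n
    var_t = sum((t - mean_t) ** 2 for t in totals) / n  # population variance, matching p*q
    return (k / (k - 1)) * (1 - sum_pq / var_t)


def rasch_prob(theta: float, b: float) -> float:
    """Rasch (1PL) item characteristic curve: P(correct | ability theta, difficulty b)."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))


def fit_statistics(item_responses: List[int], thetas: List[float], b: float) -> Tuple[float, float]:
    """Infit and outfit mean-squares for one item, given already-estimated abilities and difficulty."""
    sq_std_resid = 0.0  # sum of squared standardized residuals (for outfit)
    sq_resid = 0.0      # sum of squared raw residuals (for infit)
    info = 0.0          # sum of model variances p*(1-p), the infit weights
    for x, theta in zip(item_responses, thetas):
        p = rasch_prob(theta, b)
        w = p * (1 - p)
        r2 = (x - p) ** 2
        sq_std_resid += r2 / w
        sq_resid += r2
        info += w
    outfit = sq_std_resid / len(item_responses)  # unweighted mean of squared z-scores
    infit = sq_resid / info                      # information-weighted mean-square
    return infit, outfit


data = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 1, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]

print([round(p, 3) for p in item_difficulty(data)])
print([round(d, 3) for d in item_discrimination(data)])
print(round(kr20(data), 3))  # 0.711
```

In a real Rasch analysis the person abilities and item difficulties are estimated jointly by dedicated software rather than supplied as known values, so `fit_statistics` only shows how the mean-squares are formed once those estimates exist; values near 1.0 indicate responses consistent with the model. Differential item functioning and item-person maps are not sketched here.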

Data Availability

The datasets generated and/or analyzed during the current study are available from the corresponding author on reasonable request.

Acknowledgements

We would like to thank Chris Schnittka, Stephanie Shepherd, Joni Lakin, Anne Gold, Leilani Arthurs, Juliette Rooney-Varga, Lisa Gilbert, and Hannah Scherer for their insights and discussions that were invaluable in the development and revision of this instrument.

Ethics declarations

Conflict of Interest

The authors declare no competing interests.

About this article

Cite this article

Soltis, N.A., McNeal, K.S. Development and Validation of a Concept Inventory for Earth System Thinking Skills. Journal for STEM Educ Res 5, 28–52 (2022). https://doi.org/10.1007/s41979-021-00065-z

DOI: https://doi.org/10.1007/s41979-021-00065-z