Teacher’s Perceptions of Using an Artificial Intelligence-Based Educational Tool for Scientific Writing

- 1Department of Teaching and Learning, University of Miami, Coral Gables, FL, United States

- 2Department of Learning Sciences, Georgia State University, Atlanta, GA, United States

Efforts have constantly been made to incorporate AI into teaching and learning; however, the successful implementation of new instructional technologies is closely related to the attitudes of the teachers who lead the lesson. Teachers’ perceptions of AI utilization have been investigated by only a few scholars, because teachers generally lack experience with how AI can be utilized in the classroom and have no specific idea of what AI-adopted tools would be like. This study investigated how teachers perceived an AI-enhanced scaffolding system developed to support students’ scientific writing for STEM education. Results revealed that most STEM teachers positively experienced AI as a source for superior scaffolding. On the other hand, they also raised the possibility of several issues caused by using AI, such as the change in the role played by teachers in the classroom and the transparency of the decisions made by the AI system. These results can be used as a foundation on which to create guidelines for the future integration of AI with STEM education in schools, since they report teachers’ experiences utilizing the system and various considerations regarding its implementation.

Introduction

Artificial Intelligence (AI) has been significantly changing the structure of every industry and exponentially increasing the availability of cutting-edge tools utilized in people’s everyday lives. This state-of-the-art technology has also considerably influenced educational practices, and efforts are constantly being made to incorporate AI into teaching and learning. For several decades, educators have utilized AI techniques to advance learning management systems, assessment instruments, and other learning support tools in various STEM subjects (Koedinger et al., 1997; Mitrović, 1998; D’Mello and Graesser, 2012; Hwang and Tu, 2021). One example is Carnegie Learning’s “MIKA,” a math courseware platform which analyzes students’ work, determines their optimal performance level, and then offers learners instructional content and assessment tasks that are catered to their individual performance levels (Puri and Mishra, 2020). Zhai et al. (2020) reviewed 47 studies that adopted AI algorithms (i.e., machine learning) as assessments in science education and found AI to be an effective and validated alternative for traditional science assessments. Furthermore, the remarkable development of AI algorithms has brought about research on the ways that AI can support learners’ performance of complex pedagogical tasks in STEM education, such as scientific writing through a process-oriented approach (Walker, 2019; Latifi et al., 2020; Yang, 2021).

Despite the great potential of AI-enabled learning supports, the pervasive use of technology in education does not guarantee teachers’ ability to deploy technology in classrooms, nor does it ensure the quality of teaching (Mercader and Gairín, 2020), since teachers are not yet fully prepared to implement AI-based education (United Nations Educational, Scientific and Cultural Organization [UNESCO], 2019). Moreover, scholars have claimed that the successful implementation of new instructional technologies is closely related to the attitudes of the teachers who lead the lesson (Fernández-Batanero et al., 2021). Despite decades of professional development about educational technology integration, a great number of teachers still view the implementation of technology in the classroom negatively and are not inclined to use it (Prensky, 2008; Kaban and Ergul, 2020; Istenic et al., 2021). Instead, they continue using the same materials and teaching methodologies, rejecting the application of anything that might bring negative outcomes (Tallvid, 2016). Moreover, anxiety brought about by using new technologies can act as a burden (Zimmerman, 2006) and hinder teachers’ efforts to introduce technology on-site (Hébert et al., 2021).

In view of this, teachers need to learn not only how to use technology but also how to successfully integrate it into their curricula. Also, in order to be open to integrating advanced technology into their lessons, teachers need to understand the importance of educational technology and the affordances that it can bring to instruction. Furthermore, when it comes to AI, a great number of teachers and school officials have not yet experienced AI-based learning support and might simply recognize it as slightly more advanced educational technology. Consequently, before the successful application of an AI support system into education and an evaluation of its effectiveness, teachers should first utilize it themselves so that they can fully understand how it can scaffold STEM learning, in particular, scientific writing.

Literature Review and Theoretical Framework

Scientific Writing

Unlike general writing, scientific writing expresses scientific thinking that explores, verifies, reinforces, and improves existing knowledge, creating new knowledge (Lindsay, 2020; Grogan, 2021). In addition, scientific writing does not simply list memorized scientific knowledge, but goes through the process of constructing meaning on its own, helping students improve their scientific thinking, critical analysis reasoning, and problem-solving skills to foster scientific literacy, the ultimate goal of STEM education.

Scientific writing also emphasizes the process in which, through writing activities, learners organize what they have already learned into their perceptions. This learning strategy for writing can be seen to be in line with constructivism, emphasizing active cognition of an individual, subjective interpretations of knowledge, and collaborations (Supriyadi, 2021), which are necessary for all STEM-related subjects focused on providing solutions to current and authentic problems faced in our society. The effectiveness of scientific writing has enhanced teaching and learning strategies in STEM education, from K-12 education to post-secondary classrooms. For example, by using a web application consisting of six systematic steps to support middle and high school students’ scientific writing, students were able to improve their problem-solving skills, their deep understanding of scientific content, and their argumentation skills to perform given tasks during science lessons (Belland et al., 2019, 2020; Belland and Kim, 2021; Kim et al., 2021). In addition, 33 STEM faculty from multiple disciplines (e.g., engineering, physics, mathematics, chemistry, and biology) in higher education institutions pointed out the different roles that scientific writing plays in the practice of professional scientific knowledge, the improvement of conceptual understanding, and the understanding of how disciplinary knowledge is constructed and justified, all of which are essentials in STEM education (Moon et al., 2018).

On the other hand, because the demands of scientific writing require constant practice through the production of material and reception of feedback (Belland et al., 2020), it can become difficult for teachers to provide equal, individualized support to each of their students in the classroom.

In view of this, computer-based feedback systems have been widely used to support learners’ scientific writing, effectively offering immediate assistance to them whenever requested (Toth et al., 2002; Wiley et al., 2009; Chong and Lee, 2012; Proske et al., 2012). However, since feedback in most systems was provided uniformly without catering to the needs and difficulties of individual students, and because such feedback focused on the enhancement of students’ overall cognition rather than specific higher-order thinking skills required for scientific writing (Belland et al., 2017), a new type of support became necessary. Such support should assist students in leveraging their knowledge to bring strong evidence to a scientific writing claim for an authentic/complex learning task. AI can provide this type of support due to its many affordances in supporting STEM education.

Artificial Intelligence

Artificial intelligence (AI) has been defined as a computer program or system that has intelligence. This includes artificially implemented computer programs that have human learning, reasoning, and perceptual abilities since, as posited by Turing (1950), even machines can think like humans. Recently, AI has most often been implemented through machine learning algorithms, which adaptively create and utilize data-based models.

Machine learning is a field that develops algorithms and techniques that improve automatically so that computers can learn. In almost all fields, machine learning algorithms are used in a variety of ways, ranging from pattern discovery through big data analysis, data clustering, and sequencing to highly accurate output prediction from input data. The field of education is no exception to the use of AI. In particular, AI in STEM education is widely used to support the role of teachers as learning facilitators, academic evaluators, and counselors through the analysis of education-related big data collected from students, teachers, and schools. For example, in an elementary school math class, AI instructors, developed as a result of a machine-learning data analysis of 44 human tutors, were able to conduct one-on-one tutoring while carrying out conversations that fit each particular student’s background and personal history (Cukurova et al., 2021). Another example is the chatbot, AI-enabled software with voice recognition technology that can provide customized learning support through various tools such as computers, mobile devices, and speakers. Amazon’s Alexa and Google Home are known examples of AI-based chatbots grafted onto a speaker. These chatbots can interact with learners in class and play an assistive role in learning, providing a platform for a new learning paradigm in disciplines such as science (Topal et al., 2021), mathematics (Laksana and Fiangga, 2022), and medicine/nursing (Chang et al., 2021). In a study with 18,700 participants enrolled in 147 middle and high schools in the United States, which analyzed the change in grades in algebra, the students who used the AI education software “MATHia” alongside a traditional textbook showed significantly higher average scores than those who studied algebra only with textbooks (Pane et al., 2014).

In addition, AI can also improve assessment methods in traditional classrooms by providing timely information on students’ learning progress, success, or failure, through the analysis of their learning patterns based on big data (Sánchez-Prieto et al., 2020). In view of this, AI can demonstrate and present information that would not have been accessible with previous evaluation methods: AI makes it possible to identify whether a learner has reached the correct answer while also providing the teacher with that learner’s process leading to the correct answer. In addition, AI can successfully identify learners’ psychological states (e.g., bored, frustrated, sad) and provide support catered to each particular situation.

Artificial Intelligence-Based Scaffolding in STEM Education

Although AI has demonstrated its potential as an educational tool, there are still unsolved questions about how it enables learning in a meaningful and effective manner. Before the implementation of AI in the field of education, the use of computer-based learning support systems, also known as intelligent tutoring systems (ITS), showed promise. ITS aimed to provide individualized and step-by-step tutorials through information from expert knowledge models, student models, and tutoring models in well-defined subjects such as mathematics (Holmes et al., 2019). Since some scholars have viewed ITS as the ancestor of AI in education (Paviotti et al., 2013), a review of the literature on ITS can suggest directions on how AI can be used in the educational field. ITS has been implemented in the instruction of several STEM subjects. For example, in Beal’s (2013) study, ITS used problem solving errors to estimate students’ skills in each math topic, then selected and presented problems to students, who should be able to solve them by using the integrated help resources within their zone of proximal development. Butz et al. (2006) demonstrated the effects of ITS on improving students’ engineering design skills through the provision of an expert system that evaluated students’ problem-solving trajectory and then provided additional tutoring—through interactive materials—that supported the achievement of one or more of their learning objectives.

A common point of several studies, including the aforementioned examples, is ITS’s attempt to provide scaffolding. Scaffolding has been utilized to make the learning tasks more manageable and accessible (Hmelo-Silver et al., 2007) and to help students improve their deep content knowledge and higher-order thinking skills (Belland et al., 2017). Scaffolding interventions can be delivered in several formats such as feedback, question prompts, hints, and expert modeling (Kim et al., 2018; Kim N. J. et al., 2020) similarly to what human tutors do in STEM education. The effects of each scaffolding format can vary according to different learning contexts, performance levels, STEM disciplines, and expected outcomes (Belland et al., 2017).

Providing ideal scaffolding to students should encompass (a) real-time support to extend and improve learners’ cognitive abilities during scientific writing and to satisfy students’ scientific inquiry; and (b) dynamic support that structures and problematizes scientific problem-solving (Reiser, 2004), allowing students to gain higher-order skills (e.g., argumentation) as they engage in ill-structured problem solving (Wood et al., 1976; Belland, 2014). To this end, in the context of STEM education, several types of computer-based scaffolds have been developed to cater to students’ current learning status and needs: (i) conceptual scaffolding, which provides learners with tools, hints, and/or concepts that guide them throughout their knowledge acquisition; (ii) procedural scaffolding, which guides learners on how to use the resources that are available to them; (iii) metacognitive scaffolding, which allows students to reflect on their own thinking and learning process; and (iv) strategic scaffolding, which provides learners with guidance to solve problems (Hannafin et al., 1999; Belland et al., 2020). Particularly for scientific writing, computer-based metacognitive and strategic scaffolding can be vital in the composition of texts, as such scaffolding can guide learners as they progress through the writing process. These two scaffolding types also afford the opportunity for learners to think about what rationale they should use in the writing of their claim, which can enhance reasoning skills and the articulation of thoughts (Tan, 2000).

In addition to metacognitive and strategic scaffolding, ITS has also enabled conceptual scaffolding. However, due to technical limitations, conceptual scaffolding through ITS pales in comparison to the two other types. Additionally, ITS provides most of its scaffolding based on fixed-time intervals, which are not ideal for supporting students’ self-directed learning skills and reasoning.

These issues can be addressed by the implementation of AI-adopted scaffolding, a much more advanced scaffolding system that is able to automatically provide and/or fade immediate support customized according to each student’s needs and learning progress. AI scaffolding’s advanced capabilities include algorithms for natural language processing, which allow computers to understand and interpret human language. Natural language processing algorithms process what they hear, structure the received information, and respond in a language the user understands (McFarland, 2016; Maderer, 2017; Kim et al., 2021). In view of this, they can be applicable as a suitable learning support for scientific writing in STEM education.

Because scientific writing requires students’ advanced argumentation skills and information literacy, expert modeling becomes key for its successful development. Research investigating the effects of scaffolding in STEM education has revealed that expert modeling showed the highest effect sizes among other formats of scaffolding (Kim et al., 2018, 2021). Expert modeling shows learners how to distinguish between valuable and futile information to use as evidence and guides them on how to build claims based on such evidence. Moreover, the learning experience supported by expert modeling can activate a learner’s declarative and procedural memory modules, which in turn can improve their ability to apply their existing knowledge to perform given tasks (Anderson et al., 1997; Papathomas, 2016).

Teachers’ Perception of Using Artificial Intelligence

As previously discussed, AI implementation in the classroom has not been fully accepted due to the great number of teachers who still view technology negatively and prefer not to utilize it (Prensky, 2008; Kaban and Ergul, 2020; Istenic et al., 2021). Reasons include teacher anxiety about using new technologies (Zimmerman, 2006), and their preference to stay in their comfort zone, using the same materials and methodologies they are already familiar with (Tallvid, 2016) and hindering efforts to introduce technology on-site (Hébert et al., 2021).

Research examining educators’ overall perception of AI revealed that in the past, they had been greatly influenced by the concept of AI disseminated through the media and science fiction, which caused them to consider AI to be an occupational threat that would replace their jobs rather than be used to support the enhancement of learning and instruction (Luckin et al., 2016). However, recent studies have contributed to raising teachers’ expectations for significant changes in the educational field such as the implementation of AI in different educational settings (Panigrahi, 2020). In light of this, a new concept has been introduced: Artificial Intelligence in Education (AIED), involving all aspects of educational uses of AI (Roll and Wylie, 2016; Hrastinski et al., 2019; Petersen and Batchelor, 2019). Teachers’ perceptions of AIED systems vary according to their pedagogical belief, teaching experience, prior experience using educational technology, and the effectiveness and necessity of a particular technology, all of which can influence their willingness to adopt new educational technology (Gilakjani et al., 2013; Ryu and Han, 2018).

Several studies investigating teachers’ perception of AIED revealed that they commonly expected AI to be able to (a) provide a more effective teaching and learning process through digitalized learning material and multimodal human-computer interactions (Jia et al., 2020); and (b) resolve various learning difficulties each student has, catering to their needs in spite of large class sizes (Heffernan and Heffernan, 2014; Holmes et al., 2019). Moreover, research has shown the hope for AIED to significantly reduce teachers’ administrative workload by taking over simple and repetitive tasks (Qin et al., 2020).

Despite these educators’ positive expectations of AIED, researchers have indicated that before adopting AI in the classroom, teachers first need to learn how to use technology and, most importantly, how to successfully integrate it into their curricula. They also need to understand the importance of AI and the affordances that it can bring to instruction so that they are open to integrating advanced technology into their lessons. Additionally, a great number of teachers and school officials have not yet experienced AI-based learning support and might simply recognize it as slightly more advanced educational technology, which can lead them to underestimate AI’s role in the classroom. Consequently, before a successful application of an AI support system into education, it becomes necessary for teachers to first utilize it themselves so that they can fully understand how it can scaffold learning.

To this end, this study aimed to examine teachers’ perceptions of the application of AI in the classroom, more specifically through an AI-based scaffolding system for scientific writing developed by the researchers.

The study addressed the following research questions:

1) How do teachers perceive AI-based scaffolding for scientific writing?

2) What can be potential issues of AI utilization in the classroom from teachers’ perspectives?

Materials and Methods

Participants and Setting

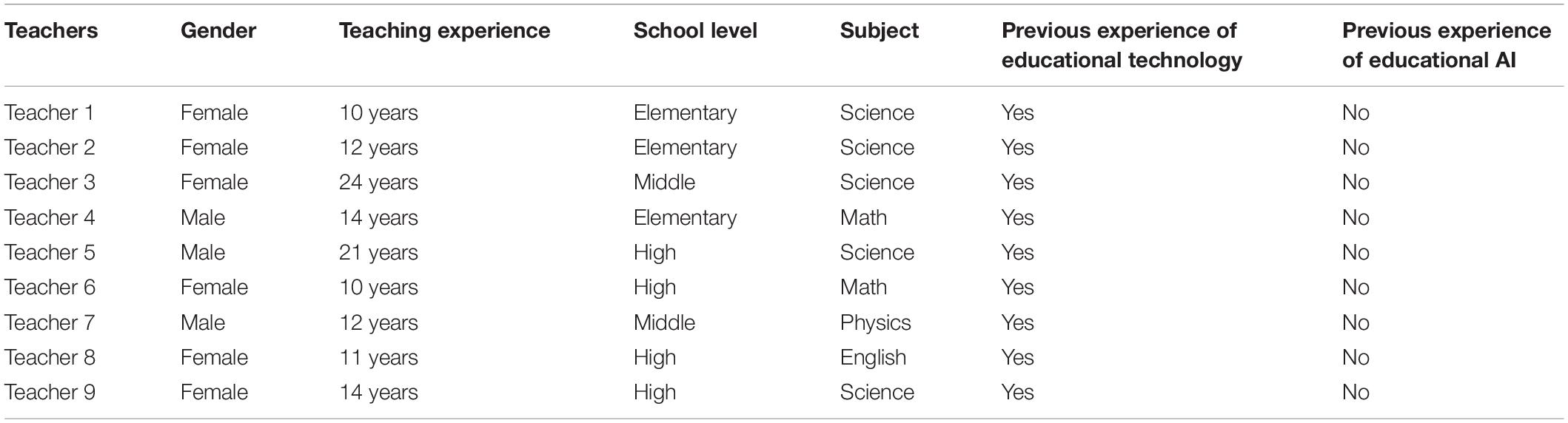

We conducted this study in a higher education institution located in the southeast region of the United States. All participants were K-12 teachers in STEM-related disciplines, except for one teacher who had recently changed subjects from science to English at the request of the school. All had at least 10 years of teaching experience; however, none of them had used AI-based scaffolding in their classes before. At the time data were collected, the participants were pursuing a doctoral degree and taking part in an online course offered in the doctoral program. In this particular course, participants learned design principles for formal learning environments. Table 1 shows the participating teachers’ background information.

Artificial Intelligence-Enhanced Scaffolding System

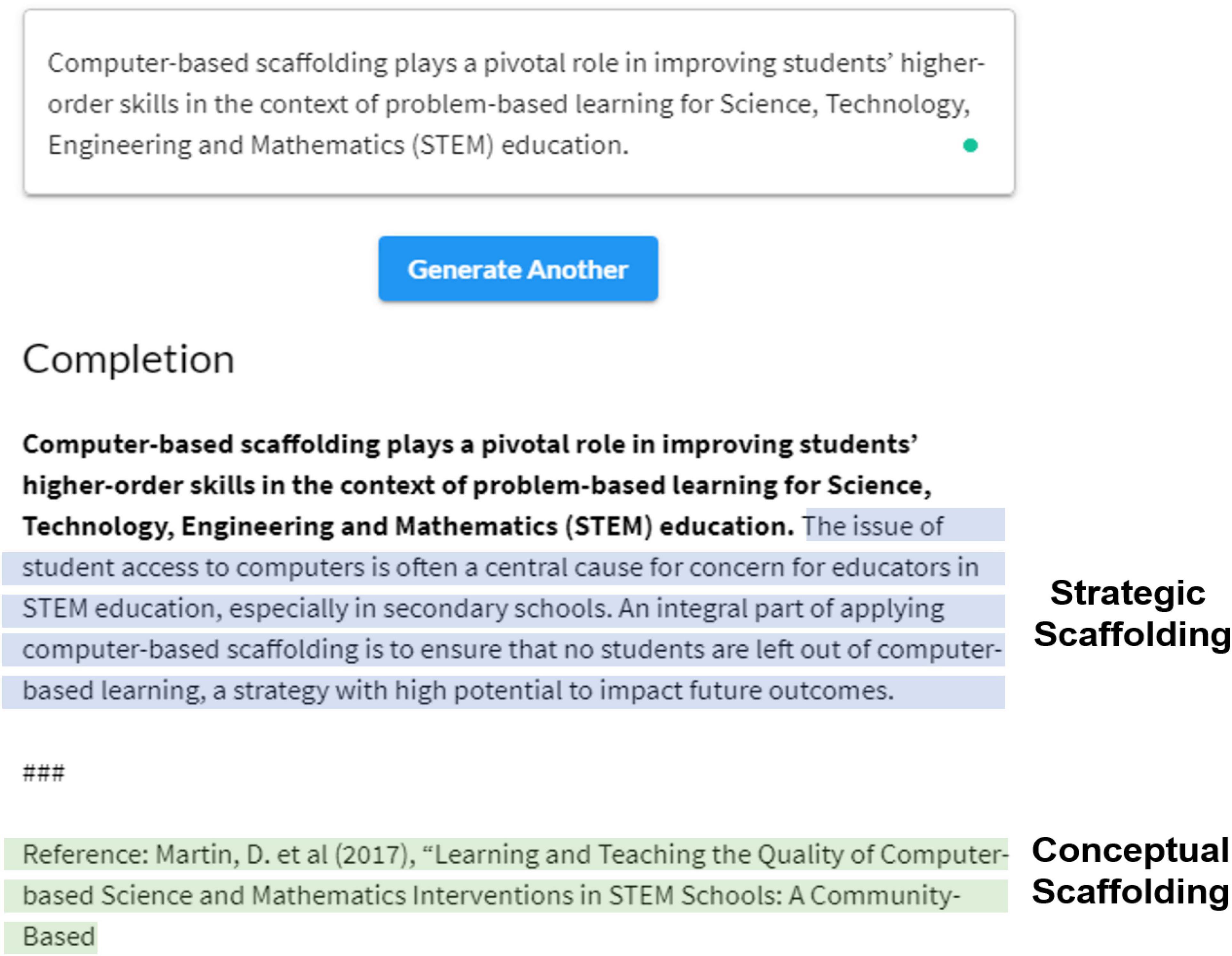

To allow teachers to experience how AI-based scaffolding supported scientific writing, this study created an artificial intelligence-enhanced scaffolding system (AISS) that catered to the academic writing process, focusing on argumentation support. The AI system utilized GPT-2, an open-source machine learning algorithm developed by OpenAI. This powerful algorithm was trained on a large corpus of data (in the millions) and can (a) create coherent and cohesive paragraphs of text, including citations, based on input provided by the user, which can consist simply of keywords or be the length of a paragraph; and (b) perform text completion, reading comprehension, and machine translation at state-of-the-art levels, on par with a variety of benchmarks for assessing language production, without the need for task-specific training (Radford et al., 2019). In this sense, GPT-2’s text completion served as expert modeling, providing users with conceptual scaffolding (i.e., completing text with citations of scholarly work according to the topic provided by users) as well as strategic scaffolding (i.e., helping the user problem-solve by giving examples or complementing a chosen topic), as shown in Figure 1.
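The text-completion step described above can be illustrated with a minimal sketch. It is not the AISS implementation, whose details are not reported here; it only assumes that the public GPT-2 checkpoint is accessed through the third-party `transformers` library. The function names, the generation settings, and the idea of passing the generator in as a parameter are all assumptions made for illustration.

```python
from typing import Callable, List

# A "generator" is any callable that maps a prompt to a list of full texts
# (prompt plus continuation). This indirection is an assumption of the sketch;
# it lets the suggestion logic be exercised without downloading a model.
Generator = Callable[[str], List[str]]


def load_gpt2_generator(max_new_tokens: int = 60, num_candidates: int = 3) -> Generator:
    """Build a generator backed by the public GPT-2 checkpoint.

    Requires the third-party `transformers` package, imported lazily so the
    rest of the sketch runs without it. The settings are illustrative only.
    """
    from transformers import pipeline

    pipe = pipeline("text-generation", model="gpt2")

    def generate(prompt: str) -> List[str]:
        outputs = pipe(
            prompt,
            max_new_tokens=max_new_tokens,
            num_return_sequences=num_candidates,
            do_sample=True,  # sampling is needed to obtain several distinct candidates
        )
        return [item["generated_text"] for item in outputs]

    return generate


def expert_model_suggestions(topic_sentence: str, generator: Generator) -> List[str]:
    """Return only the text that follows the learner's own sentence."""
    prompt = topic_sentence.strip()
    suggestions = []
    for text in generator(prompt):
        # The pipeline returns the prompt followed by the continuation by default.
        continuation = text[len(prompt):] if text.startswith(prompt) else text
        continuation = continuation.strip()
        if continuation:
            suggestions.append(continuation)
    return suggestions
```

With the library installed, `expert_model_suggestions("Air quality in the United States has changed.", load_gpt2_generator())` would return a few candidate continuations. Because the model samples its output, these candidates differ from run to run.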

Context

In weeks 1 and 2 of the course, most participating teachers were not familiar with AI integration into teaching and learning. During the two weeks before experiencing AISS, teachers were instructed to read several pieces of literature related to the potential of AIED. The literature included both examples of AI in STEM education and the pros and cons of existing educational technology tools. Teachers also had a chance to participate in a demonstration of different types of AI-based learning systems, in the context of science education, that were developed by researchers for another study (Kim et al., 2021). Through this activity, teachers could understand how AI could play a role as scaffolding to support students’ scientific problem-solving. In addition, teachers created discussion board posts to share their opinions about the application and integration of AI into their STEM-related education and responded to at least two of their classmates’ posts based on the activities in weeks 1 and 2.

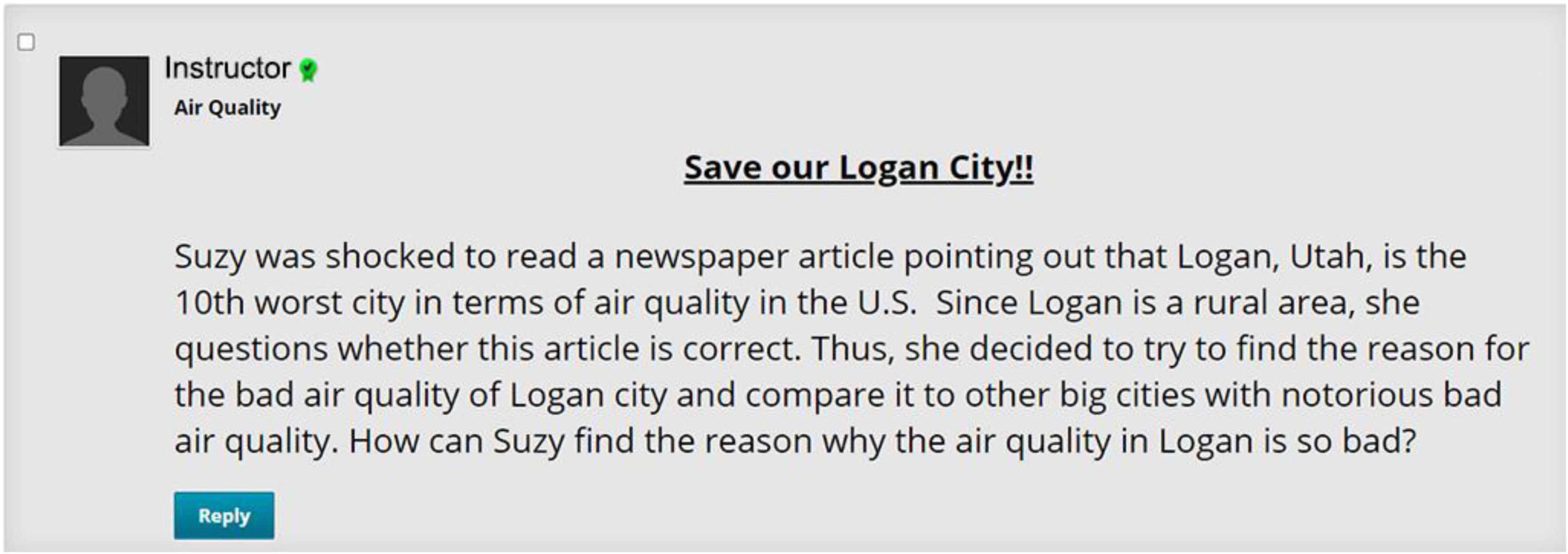

In week 3 of the course, teachers were asked to interact with the AISS scaffolding system and assess it from the standpoint of students. They then shared their opinions about the perceived advantages and disadvantages of the tool. For this activity, participants followed the sequential activities provided in the AISS to write one scientific essay. Before starting to write, they received an ill-structured/authentic task related to an issue about air quality in the United States (please see Figure 2) and were given 30 min to search for relevant information to support their problem-solving.

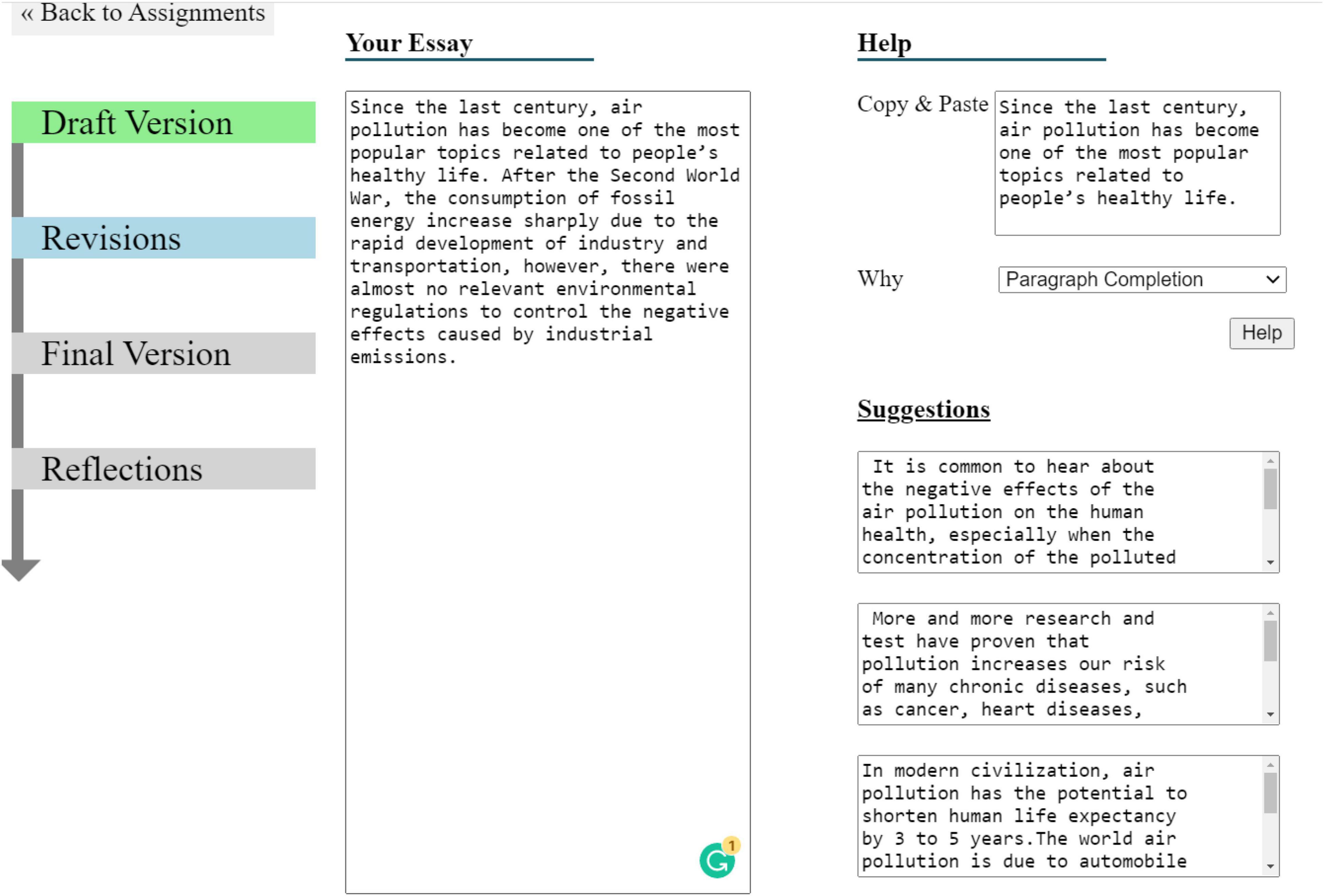

After their initial search, they were asked to create a draft version of the essay without support (i.e., on their own, a maximum of 200 words, no citation needed). Next, participants selected an initial sentence (often their topic sentence) and let the AISS generate the following sentences or paragraphs based on their input. Finally, they took notes in their revision log and reflected on what changes were made based on the AISS scaffolding and what they learned from the AI-based essay writing support (please see Figure 3).

Data Collection

Log Files

Log files included (i) time spent from initial log in to the time they logged out; (ii) what they wrote in response to the authentic topic provided to them; and (iii) the expert modeling scaffolding generated by the AISS system. These log files were used to triangulate each participant’s interview, contributing to the trustworthiness of the interpretation of the data.
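As an illustration only, the three logged elements could be represented as a simple record. The field names and example values below are hypothetical and are not taken from the AISS; the sketch merely shows how time on task follows from the login and logout timestamps.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class SessionLog:
    """One participant's session; all field names are hypothetical."""

    participant_id: str
    logged_in_at: datetime       # (i) start of the session
    logged_out_at: datetime      # (i) end of the session
    draft_text: str              # (ii) what the participant wrote
    generated_scaffold: str      # (iii) expert-modeling text returned by the system

    def time_on_task(self) -> timedelta:
        """Elapsed time between login and logout."""
        if self.logged_out_at < self.logged_in_at:
            raise ValueError("logout precedes login")
        return self.logged_out_at - self.logged_in_at


# Made-up example values, for illustration only.
example = SessionLog(
    participant_id="T1",
    logged_in_at=datetime(2021, 1, 1, 10, 0),
    logged_out_at=datetime(2021, 1, 1, 10, 45),
    draft_text="draft",
    generated_scaffold="scaffold",
)
print(example.time_on_task())
```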

Interviews

All participating teachers engaged in 10-min interviews after the completion of the writing task, focusing on (i) how they used the AI-based scaffolding; (ii) what they learned from the experience; (iii) the pros and cons of the AI-based scaffolding; and (iv) its affordances as an educational tool. All interviews were transcribed as a set of texts for analysis.

Data Analysis

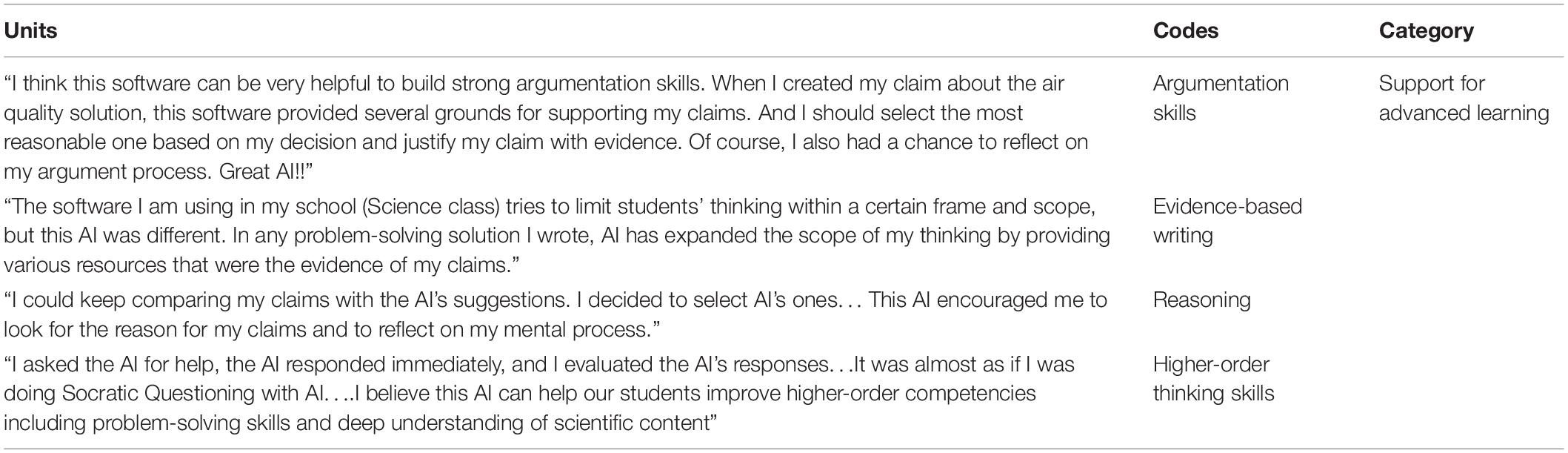

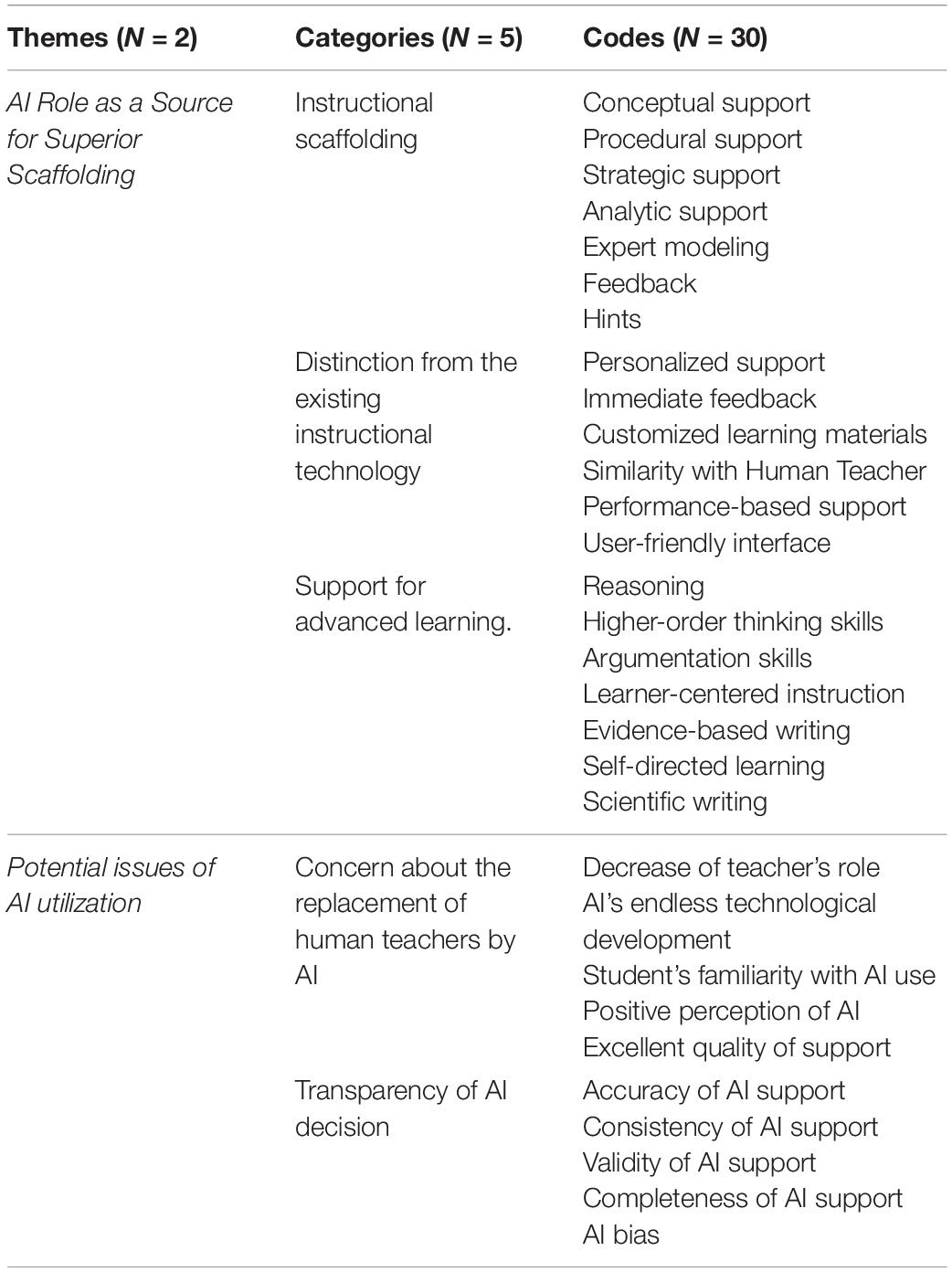

Leveraging qualitative data, a thematic case analysis was conducted. The thematic analysis aimed to identify the patterns and links between the themes extracted from the qualitative data that would address the research questions (Braun and Clarke, 2006). The analysis consisted of five steps. First, researchers read the transcribed interviews repeatedly and became familiar with their content. Second, initial codes (N = 32) were created for units of words or phrases that were relevant to the research questions. After a careful review, all codes were categorized and collated to identify significant patterns of meaning. These grouped codes culminated in a set of categories. Table 2 shows examples of codes related to one category, “support for advanced learning.”

The categories were then finalized through a refinement process in which they were split, integrated, or discarded. After all the categories were classified and named, a total of five categories were derived and grouped into two themes. AI as a source for superior scaffolding was the first theme, which consisted of three categories. The remaining two categories fit the theme of potential issues of AI utilization (see Table 3).

The qualitative data analysis was validated by a consensus of the two coders and showed high inter-rater reliability between these two coders (Krippendorff’s alpha = 0.93), which is above the minimally acceptable level (α = 0.667, Krippendorff, 2004).
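For readers unfamiliar with the statistic, Krippendorff’s alpha is defined as α = 1 − D_o / D_e, where D_o is the observed disagreement and D_e is the disagreement expected by chance. The sketch below computes it for nominal labels under stated assumptions: the function name and the toy labels are hypothetical, and this is not the software or data used in this study.

```python
from collections import Counter
from itertools import permutations


def krippendorff_alpha_nominal(units):
    """Krippendorff's alpha for nominal data.

    `units` is a list of lists; each inner list holds the labels that the
    coders assigned to one unit. Units with fewer than two labels are skipped.
    """
    # Coincidence matrix: each ordered pair of labels within a unit
    # contributes 1 / (m - 1), where m is the number of labels in that unit.
    coincidences = Counter()
    for labels in units:
        m = len(labels)
        if m < 2:
            continue
        for a, b in permutations(labels, 2):
            coincidences[(a, b)] += 1.0 / (m - 1)

    # Marginal totals per category and the grand total of pairable values.
    totals = Counter()
    for (a, _b), weight in coincidences.items():
        totals[a] += weight
    n = sum(totals.values())
    if n < 2:
        raise ValueError("no pairable units")

    observed = sum(w for (a, b), w in coincidences.items() if a != b)
    expected = sum(
        totals[a] * totals[b] for a in totals for b in totals if a != b
    ) / (n - 1)
    if expected == 0:
        raise ValueError("alpha is undefined when only one category is used")
    return 1.0 - observed / expected


# Toy example with made-up labels from two coders on four units.
toy_units = [["a", "a"], ["a", "b"], ["b", "b"], ["b", "b"]]
print(round(krippendorff_alpha_nominal(toy_units), 3))
```

In the toy example the coders disagree on one of four units, which gives an alpha of 8/15 (about 0.533). That is below the 0.667 threshold cited above, whereas the value reported for this study (0.93) is well above it.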

Results

Artificial Intelligence Role as a Source for Superior Scaffolding

Most teachers responded positively to the use of the AISS for learning in that the system was able to act as an expert model, providing qualified examples of scientific writing, valuable resources to make users’ claims stronger, and immediate individualized feedback.

Teacher 1: The sentences were generated in under forty-five seconds, which was impressive. Moreover, the sentences the generator provides are well written, which makes me want to pay more attention to better sentence structure. The paragraphs created by the system were an exemplary model.

Teacher 2: When I used the AISS software, I was pleasantly surprised! … after I wrote about content from one book, it gave me the following result, which I thought was pretty good, although I don’t know the phrase “Pre-Advisory and Technical Development.”

Teacher 4: One great feature was the reflection piece, where one has to answer questions about the AI feedback and think about how the writing should be revised. I don’t subscribe to the idea that students will no longer write essays because of this software. I do think that it would be best served as an analytical tool for learning how to write strong paragraphs and sentences.

Beyond simply giving students a uniform example to follow and imitate, the AISS gave each user an individualized expert model directly based on their own writing. The suggestions provided by the AISS played the role of scaffolding on how to complete their writing logically and systematically in a manner that was consistent with each user’s lexical and grammatical choices, which can improve learners’ creative thinking, problem-solving skills, and the application of these skills to STEM-related real-life problems. The AISS also showed how the claims for problem-solving in writing could be supported by strong evidence.

In addition, some teachers made comparisons between the AI-based scaffolding and existing educational technology tools due to their previous negative experiences with and/or impressions of educational technology used in STEM education. This comparison enabled teachers themselves to understand the effects and possibilities of an AI-adopted learning support system, which greatly differs from existing educational technology tools.

Teacher 6: The educational technology I have experienced so far (in my math class) has largely remained at the level of providing a mechanical response based on the correctness of the student’s answer. However, this program was different. It was like the real AI robots I saw in an SF movie.

Teacher 7: When I first used this program, this reminded me of quite a bit of Grammarly because it also gives suggestions to improve the spelling of words or rewriting some phrases. However, this AI is more than that. Beyond the correction of my writing, this AI played the role of my own human writing tutor. Amazing.

Some teachers claimed that this kind of support would be more beneficial to middle or high school students than to younger students, since the former have a better understanding of and more experience with scientific writing. Furthermore, the AISS can be useful for a learner-centered instructional model in STEM education, where the enhancement of students’ advanced self-directed learning and problem-solving skills is needed.

Teacher 8: I think this would be an incredibly effective tool for students 8th–12th to learn about sentence structures and sequencing of paragraphs. For example, any teacher can review with students what sentence follows the topic sentence and why. Or, a group can review why they chose one sentence over another to logically follow the next.

Teacher 9: I do see the potential of this program. I think that this program can be very useful in problem-based learning for my science class. If this software can provide support whenever students have trouble connecting claims with strong evidence, it can be extremely beneficial to students. I can picture using this type of technology with middle and high school students to help them reflect and improve their writing.

Potential Issues of Artificial Intelligence Utilization

There were many opinions regarding the fact that the AISS assumed an intermediary position (i.e., going between teachers and students), and these teachers felt their roles in the learning process would be reduced to those of class assistants and/or supervisors. As a result, teachers explained that they needed to think about what role they would assume, distinct from that of the AISS.

Teacher 3: I was very skeptical about new technology in my classroom and I never thought AI could replace a human teacher. But I think this AI is quite amazing and has excellent potential. It is a great resource to have! However, I am also worried that the introduction of AI will gradually reduce our role in the classroom. What should we do? Should we support AI?

Teachers also raised the possibility of several issues caused by using the AISS. For example, they claimed that the system should be able to explain its decisions. They felt that they should know that “when A is entered into the AISS, B comes out.” That is, the outcomes from the AISS should be within human comprehension. In other words, decisions made by the system and by human teachers in the same context should be identical.

Teacher 5: It’s so amazing. I think I can use it in my class really well. One problem is that I can’t predict the completed sentences that this program generates. So, I also need time to review whether the sentences by this program are good models for students. But students will never wait for me. And it would be great if the AI added the explanation about how to create the sentences, in case we wanted it. Of course, this explanation should be reasonable from my perspective.

This teacher also added that users should be notified that the result came from the AISS, not from a teacher, to avoid unconditional faith in the AI’s support or product. This demonstrates teachers’ remaining doubts regarding the validity of the outcomes generated by the system.

Discussion

In recent years, there has been a push for pedagogical change to benefit future generations through the implementation of cutting-edge educational technology and student-centered learning. Globally, this growing expectation has the potential to improve students’ performance and increase their interest and motivation toward learning. In the United States, educational transformation through the utilization of AI is being emphasized in the field of STEM education (Sperling and Lickerman, 2012; Vachovsky et al., 2016; Sakulkueakulsuk et al., 2018; Branchetti et al., 2019). The purpose of AI education is to cultivate students’ thinking, creativity, problem-solving, and collaboration skills by applying STEM education elements to all educational activities using AI technology (Lin et al., 2021). For this purpose, the efforts and capacity of teachers to lead AI education are essential. Reflecting this, in a recent National Science Foundation funded workshop on Artificial Intelligence and the future of STEM, most participants agreed on the importance of integrating AI into STEM education (Tucker et al., 2020). For AI to be successfully integrated into STEM education, it is necessary for the roles and relationships between students and teachers to be redefined and for educators to be fully trained on best practices of using AI pedagogical techniques (Tucker et al., 2020; Yurtseven Avci et al., 2020). A significant challenge to solve before the successful integration of AI in STEM education is teachers’ perceptions of the uses and limitations of educational technology in their classrooms. Despite AI’s great capabilities to overcome many limitations of existing educational tools (i.e., those utilizing simple technology), there is still a trend among educators to hold negative impressions of educational technology.
By changing teachers’ current negative perceptions of educational technology, the acceptance of AI as a new type of educational tool and its implementation in schools is possible. In this study, teachers personally interacted with the AI-based educational tool before its implementation in schools. They experienced first-hand the potential of this scaffolding system to support complex learning, and commented on factors that, in their opinions, should be considered for the effective and efficient application of this tool in STEM education. A summary of teachers’ opinions regarding the advantages and opportunities for performance improvement is included next.

Perception Changes

Upon examining the participants’ change in perception regarding the use and implementation of AI-based educational tools, it was confirmed that the “hands-on” experience with the tools increased their awareness of what this technology can do and their receptiveness to its possible future adoption in schools. Although there were individual differences in the degree of change in perception, the biggest change occurred in teachers with less teaching experience (suggesting their younger age). The younger generation of teachers, who have more experience with educational technology as both educators and students, is more interested in exploring new digital technology and potentially incorporating technology into their lessons. This result corroborates similar research studies investigating young teachers’ preference for the use of educational technology (Semerci and Aydin, 2018; Trujillo-Torres et al., 2020). Another possible reason for changing attitudes toward AI in education may be teachers’ familiarity with AI in their daily lives. For example, AI is now built into smartphones (e.g., Google Assistant and Siri) and other devices (e.g., Alexa and Google Home) to continuously improve the functionality and efficiency of our lives. Many people also have experience with self-driving cars that are operated by AI algorithms. AI is automatically filtering our spam emails with very high accuracy every day. Experience with AI in any of these contexts may reduce teachers’ reluctance to use AI for educational purposes. Within the context of this study, having these experiences may have positively influenced teachers’ perception of a new educational technology tool. This claim is supported by the study by Wood et al. (2005), which demonstrated that teachers’ familiarity and comfort with technology can lead to greater integration of technology in the classroom.
This can potentially be especially beneficial to current and future cohorts of students who have grown up as “digital natives” with very early familiarity with educational technology (Tshuma, 2021). These students have no resistance to the use of new technologies and AI for educational purposes (Dai et al., 2020; Kim J. et al., 2020). In other words, AI adaptations in STEM education can be a novel solution in situations where it has been very difficult to enhance and/or maintain students’ motivation, interest, and engagement in learning.

Artificial Intelligence Scaffolding for Scientific Writing in STEM Education

This study posits that the combination of three concepts (i.e., computer-based scaffolding, AI, and scientific writing) can improve the quality of STEM education and change teachers’ perceptions toward using educational technology in their STEM-related classrooms. Several existing studies have demonstrated that computer-based scaffolding is effective in improving students’ advanced problem-solving and higher-order thinking skills in STEM education (González-Gómez and Jeong, 2019; Kim et al., 2021; Saputri, 2021). This is because computer-based scaffolding of cognitive, metacognitive, and strategic supports can address students’ needs and learning difficulties during the process of solving ill-structured/authentic STEM-related tasks (Belland et al., 2017). Furthermore, AI-based learning support systems can serve as advanced versions of computer-based scaffolding. For example, AI can determine the provision and fading of more accurate, individualized, and optimally timed scaffolding through data-driven decisions in addition to other commonly utilized roles of computer-based scaffolding (Doo et al., 2020; Spain et al., 2021).

As the goal of STEM education has changed from acquiring knowledge to emphasizing the process of knowledge construction, scientific writing is emerging as a powerful learning strategy. Scientific writing provides students with opportunities to figure out what they already know and what they need to know through the reflection, clarification, and articulation of their thinking (Jeon et al., 2021), and requires students to engage in higher-order thinking. In this sense, the trial in this research combines an AI-based advanced technology tool, scaffolding support, and scientific writing as a teaching and learning strategy into a steppingstone toward the full integration of AI as a learning support in STEM education.

Results from this study suggest that all participating STEM teachers agreed that AI could fully serve as scaffolding for students’ scientific writing to solve the given scientific problem in online learning environments. When interacting with the system, teachers were prompted to actively search for and utilize online resources for problem-solving, which, in their opinion, made the writing process very interesting. Unlike previous computer-based supports, the expert models generated provided them with individualized support that was based on the input they provided. That is, the AI-generated writing scaffolds were in line with each individual’s writing skills and knowledge levels, allowing them to play a pivotal role in guiding logical thinking, reasoning, and argumentation skills—pre-requisites for scientific writing (Belland and Kim, 2021). On the other hand, due to the need for more advanced reasoning and problem-solving skills for the successful completion of scientific writing, teachers commented on its better suitability for middle and high school students rather than younger children. In fact, scholars have predicted an explosive demand for AI-based scaffolding in secondary and/or post-secondary STEM education in the near future (Maderer, 2017; Becker et al., 2018; Metz and Satariano, 2018; Doo et al., 2021).

Artificial Intelligence Concerns and Future Directions

Some of the participants were especially concerned about the roles of AI when utilized to support learning. They feared that the AI would reduce their role to that of assistants, and they also questioned the accuracy and reliability of the information generated by the system. On the other hand, the fast pace at which changes are taking place in the overall educational environment is likely to continue in the future, and some teachers have acknowledged this fact. They have suggested future directions so that teachers and AI can coexist, which include familiarity with AI-enabled scaffolding and an awareness of how the technology can be integrated into instructional settings. Additionally, teachers in this study argued in favor of receiving further professional development regarding the use of AI, which in turn can enhance their careers and ease typical concerns with using this technology. This corroborates scholars’ calls for more frequent technology-supported pedagogical professional development (Ertmer et al., 2012; Hao and Lee, 2015; Froemming and Cifuentes, 2020).

Limitation

The AISS for scientific writing in this study was developed with the main purpose of understanding STEM teachers’ general perception of using AI. The topic of the task given for the use of the AISS was limited to the context of science education generally. In other words, the specificity of the various subjects of STEM education was not reflected. Revealing the specific advantages and disadvantages of this AI software when used in other STEM-related disciplines was beyond the scope of this study. This makes it difficult to provide specific suggestions on how the AISS can be practically utilized in each STEM discipline in ways that reflect each discipline’s own unique nature and learning process. Therefore, future research is needed to develop tasks for each STEM subject (i.e., Science, Technology, Engineering, and Mathematics). In other words, integrated STEM-related tasks that reflect the specific curriculum of each subject still need to be developed so that teachers can utilize this AI program for scientific writing.

Conclusion

As a result of the unprecedented coronavirus crisis, there has been a huge shift in education, with K-12 schools and universities around the world being forced to close to promote health and safety. Online learning was adopted almost overnight with alternative methods, leading to a full-fledged discussion on the use of AI in the education field much faster than expected. However, as always, the successful implementation of new instructional technologies is closely related to the attitudes of the teachers who lead the lesson. Nevertheless, teachers’ perceptions of AI utilization have been investigated by only a few scholars due to an overall lack of experience within the teaching field with AI utilization in the classroom. Most teachers simply have no specific idea of what AI-adopted tools would be like.

In this regard, this study is significant to the field in revealing STEM teachers’ overall positive perception regarding this innovative AI-based scaffolding and opportunities for future improvements. In addition, the results on teachers’ experiences using the system and their considerations regarding its implementation can be used as a foundation for developing guidelines for the future integration of AI into school curricula, particularly in STEM education.

Data Availability Statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author/s.

Ethics Statement

The studies involving human participants were reviewed and approved by the Institutional Review Board, University of Miami. The participants provided their written informed consent to participate in this study.

Author Contributions

NK designed and implemented the research. Both authors contributed to analyzing the data, developing the tool, and writing the manuscript and approved the final version.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Anderson, J. R., Matessa, M., and Lebiere, C. (1997). ACT-R: a theory of higher level cognition and its relation to visual attention. Hum. Comput. Interact. 12, 439–462. doi: 10.1207/s15327051hci1204_5

Beal, C. R. (2013). “AnimalWatch: An intelligent tutoring system for algebra readiness,” in International Handbook of Metacognition and Learning Technologies, eds R. Azevedo and V. Aleven (New York: Springer), 337–348. doi: 10.1007/978-1-4419-5546-3_22

Becker, S. A., Brown, M., Dahlstrom, E., Davis, A., DePaul, K., Diaz, V., et al. (2018). NMC Horizon Report: 2018 Higher Education Edition. Louisville: Educause.

Belland, B. R. (2014). “Scaffolding: Definition, current debates, and future directions,” in Handbook of Research on Educational Communications and Technology, eds J. Spector, M. Merrill, J. Elen, and M. Bishop (Springer: New York), 505–518. doi: 10.1007/978-1-4614-3185-5_39

Belland, B. R., and Kim, N. J. (2021). Predicting high school students’ argumentation skill using information literacy and trace data. J. Educ. Res. 114, 211–221. doi: 10.1080/00220671.2021.1897967

Belland, B. R., Gu, J., Kim, N. J., Jaden Turner, D., and Mark Weiss, D. (2019). Exploring epistemological approaches and beliefs of middle school students in problem-based learning. J. Educ. Res. 112, 643–655. doi: 10.1080/00220671.2019.1650701

Belland, B. R., Walker, A. E., Kim, N. J., and Lefler, M. (2017). Synthesizing results from empirical research on computer-based scaffolding in STEM education: a meta-analysis. Rev. Educ. Res. 87, 309–344. doi: 10.3102/0034654316670999

Belland, B. R., Weiss, D. M., and Kim, N. J. (2020). High school students’ agentic responses to modeling during problem-based learning. J. Educ. Res. 113, 374–383. doi: 10.1080/00220671.2020.1838407

Branchetti, L., Levrini, O., Barelli, E., Lodi, M., Ravaioli, G., Rigotti, L., et al. (2019). “STEM analysis of a module on Artificial Intelligence for high school students designed within the I SEE Erasmus+ Project,” in Eleventh Congress of the European Society for Research in Mathematics Education, (Utrecht: Freudenthal Institute).

Braun, V., and Clarke, V. (2006). Using thematic analysis in psychology. Qual. Res. Psychol. 3, 77–101. doi: 10.1191/1478088706qp063oa

Butz, B. P., Duarte, M., and Miller, S. M. (2006). An intelligent tutoring system for circuit analysis. IEEE Trans. Educ. 49, 216–223. doi: 10.1109/TE.2006.872407

Chang, C. Y., Hwang, G. J., and Gau, M. L. (2021). Promoting students’ learning achievement and self-efficacy: a mobile chatbot approach for nursing training. Br. J. Educ. Technol. 53, 171–188. doi: 10.1111/bjet.13158

Chong, S. X., and Lee, C.-S. (2012). Developing a Pedagogical-Technical Framework to Improve Creative Writing. Educ. Technol. Res. Dev. 60, 639–657. doi: 10.1007/s11423-012-9242-9

Cukurova, M., Khan-Galaria, M., Millán, E., and Luckin, R. (2021). A Learning Analytics Approach to Monitoring the Quality of Online One-to-one Tutoring. J. Learn. Anal. 1, 1–10. doi: 10.35542/osf.io/qfh7z

Dai, Y., Chai, C. S., Lin, P. Y., Jong, M. S. Y., Guo, Y., and Qin, J. (2020). Promoting students’ well-being by developing their readiness for the artificial intelligence age. Sustainability 12:6597. doi: 10.3389/fdgth.2021.739327

D’Mello, S., and Graesser, A. (2012). Dynamics of affective states during complex learning. Learn. Instr. 22, 145–157. doi: 10.1016/j.learninstruc.2011.10.001

Doo, M. Y., Bonk, C., and Heo, H. (2020). A meta-analysis of scaffolding effects in online learning in higher education. Int. Rev. Res. Open Distrib. Learn. 21, 60–80. doi: 10.19173/irrodl.v21i3.4638

Doo, M. Y., Bonk, C. J., and Kim, J. (2021). An investigation of under-represented MOOC populations: motivation, self-regulation and grit among 2-year college students in Korea. J. Comput. High. Educ. 33, 1–22. doi: 10.1007/s12528-021-09270-6

Ertmer, P. A., Ottenbreit-Leftwich, A. T., Sadik, O., Sendurur, E., and Sendurur, P. (2012). Teacher beliefs and technology integration practices: a critical relationship. Comput. Educ. 59, 423–435.

Fernández-Batanero, J. M., Román-Graván, P., Reyes-Rebollo, M. M., and Montenegro-Rueda, M. (2021). Impact of educational technology on teacher stress and anxiety: a literature review. Int. J. Environ. Res. Public Health 18:548. doi: 10.3390/ijerph18020548

Froemming, C., and Cifuentes, L. (2020). “Professional Development for Technology Integration in the Early Elementary Grades,” in Society for Information Technology & Teacher Education International Conference, (Waynesville: Association for the Advancement of Computing in Education (AACE)), 444–450.

Gilakjani, A. P., Leong, L. M., and Ismail, H. N. (2013). Teachers’ Use of Technology and Constructivism. Int. J. Mod. Educ. Comput. Sci. 5, 49–63. doi: 10.5815/ijmecs.2013.04.07

González-Gómez, D., and Jeong, J. S. (2019). EdusciFIT: a computer-based blended and scaffolding toolbox to support numerical concepts for flipped science education. Educ. Sci. 9:116. doi: 10.3390/educsci9020116

Grogan, K. E. (2021). Writing Science: what Makes Scientific Writing Hard and How to Make It Easier. Bull. Ecol. Soc. Am. 102:e01800. doi: 10.1002/bes2.1800

Hannafin, M., Land, S., and Oliver, K. (1999). “Open-ended learning environments: foundations, methods, and models,” in Instructional-Design Theories and Models: volume II: A New Paradigm of Instructional Theory, ed. C. M. Reigeluth (Mahwah: Lawrence Erlbaum Associates), 115–140.

Hao, Y., and Lee, K. S. (2015). Teachers’ concern about integrating Web 2.0 technologies and its relationship with teacher characteristics. Comput. Hum. Behav. 48, 1–8. doi: 10.1016/j.chb.2015.01.028

Hébert, C., Jenson, J., and Terzopoulos, T. (2021). “Access to technology is the major challenge”: teacher perspectives on barriers to DGBL in K-12 classrooms. E-Learn. Digital Media 18, 307–324. doi: 10.1177/2042753021995315

Heffernan, N. T., and Heffernan, C. L. (2014). The ASSISTments ecosystem: building a platform that brings scientists and teachers together for minimally invasive research on human learning and teaching. Int. J. Artif. Intell. Educ. 24, 470–497. doi: 10.1007/s40593-014-0024-x

Hmelo-Silver, C. E., Duncan, R. G., and Chinn, C. A. (2007). Scaffolding and achievement in problem-based and inquiry learning: a response to Kirschner, Sweller, and Clark (2006). Educ. Psychol. 42, 99–107. doi: 10.1080/00461520701263368

Holmes, W., Bialik, M., and Fadel, C. (2019). Artificial intelligence in Education. Boston: Center for Curriculum Redesign. doi: 10.1007/978-3-319-60013-0_107-1

Hrastinski, S., Olofsson, A. D., Arkenback, C., Ekström, S., Ericsson, E., Fransson, G., et al. (2019). Critical imaginaries and reflections on artificial intelligence and robots in postdigital K-12 education. Postdigit. Sci. Educ. 1, 427–445. doi: 10.1007/s42438-019-00046-x

Hwang, G. J., and Tu, Y. F. (2021). Roles and research trends of artificial intelligence in mathematics education: a bibliometric mapping analysis and systematic review. Mathematics 9:584. doi: 10.3390/math9060584

Istenic, A., Bratko, I., and Rosanda, V. (2021). Pre-service teachers’ concerns about social robots in the classroom: a model for development. Educ. Self Dev. 16, 60–87. doi: 10.26907/esd.16.2.05

Jeon, A. J., Kellogg, D., Khan, M. A., and Tucker-Kellogg, G. (2021). Developing critical thinking in STEM education through inquiry-based writing in the laboratory classroom. Biochem. Mol. Biol. Educ. 49, 140–150. doi: 10.1002/bmb.21414

Jia, J., He, Y., and Le, H. (2020). “A multimodal human-computer interaction system and its application in smart learning environments,” in International Conference on Blended Learning, (Cham: Springer), 3–14. doi: 10.1007/978-3-030-51968-1_1

Kaban, A. L., and Ergul, I. B. (2020). “Teachers’ attitudes towards the use of tablets in six EFL classrooms,” in Examining the Roles of Teachers and Students in Mastering New Technologies, ed. P. Eva (Pennsylvania: IGI Global), 284–298. doi: 10.4018/978-1-7998-2104-5.ch015

Kim, J., Merrill, K., Xu, K., and Sellnow, D. D. (2020). My teacher is a machine: understanding students’ perceptions of AI teaching assistants in online education. Int. J. Hum. Comput. Interact. 36, 1902–1911. doi: 10.1080/10447318.2020.1801227

Kim, N. J., Belland, B. R., Lefler, M., Andreasen, L., Walker, A., and Axelrod, D. (2020). Computer-based scaffolding targeting individual versus groups in problem-centered instruction for STEM education: meta-analysis. Educ. Psychol. Rev. 32, 415–461. doi: 10.1007/s10648-019-09502-3

Kim, N. J., Belland, B. R., and Walker, A. E. (2018). Effectiveness of computer-based scaffolding in the context of problem-based learning for STEM education: Bayesian meta-analysis. Educ. Psychol. Rev. 30, 397–429. doi: 10.1007/s10648-017-9419-1

Kim, N. J., Vicentini, C. R., and Belland, B. R. (2021). Influence of scaffolding on information literacy and argumentation skills in virtual field trips and problem-based learning for scientific problem solving. Int. J. Sci. Math. Educ. 20, 215–236. doi: 10.1007/s10763-020-10145-y

Koedinger, K. R., Anderson, J. R., Hadley, W. H., and Mark, M. A. (1997). Intelligent tutoring goes to school in the big city. Int. J. Artif. Intell. Educ. 8, 30–43.

Krippendorff, K. (2004). Reliability in content analysis: some common misconceptions and recommendations. Hum. Commun. Res. 30, 411–433. doi: 10.1111/j.1468-2958.2004.tb00738.x

Laksana, F. S. W., and Fiangga, S. (2022). The development of web-based chatbot as a mathematics learning media on system of linear equations in three variables. Jurnal Ilmiah Pendidikan Matematika 11, 145–154.

Latifi, S., Noroozi, O., and Talaee, E. (2020). Worked example or scripting? Fostering students’ online argumentative peer feedback, essay writing and learning. Interact. Learn. Environ. 1–15. doi: 10.1080/10494820.2020.1799032

Lin, C. H., Yu, C. C., Shih, P. K., and Wu, L. Y. (2021). STEM based artificial intelligence learning in general education for non-engineering undergraduate students. Educ. Technol. Soc. 24, 224–237.

Lindsay, D. (2020). Scientific writing = thinking in words. Clayton: Csiro Publishing. doi: 10.1071/9781486311484

Luckin, R., Holmes, W., Griffiths, M., and Forcier, L. B. (2016). Intelligence unleashed: An argument for AI in education. London: Pearson Education.

Maderer, J. (2017). Jill Watson, Round Three, Georgia Tech course prepares for third semester with virtual teaching assistants. Atlanta: Georgia Tech News Center.

McFarland, M. (2016). What Happened When A Professor Built a Chatbot to be his Teaching Assistant?. Washington: Washington Post.

Mercader, C., and Gairín, J. (2020). University teachers’ perception of barriers to the use of digital technologies: the importance of the academic discipline. Int. J. Educ. Technol. High. Educ. 17:4. doi: 10.1186/s41239-020-0182-x

Metz, C., and Satariano, A. (2018). Silicon Valley’s Giants Take Their Talent Hunt to Cambridge. New York: The New York Times. Retrieved from https://www.nytimes.com/2018/07/03/technology/cambridge-artificial-intelligence.html

Mitrović, A. (1998). “Experiences in implementing constraint-based modeling in SQL-Tutor,” in International Conference on Intelligent Tutoring Systems, (Berlin: Springer), 414–423. doi: 10.1007/3-540-68716-5_47

Moon, A., Gere, A. R., and Shultz, G. V. (2018). Writing in the STEM classroom: faculty conceptions of writing and its role in the undergraduate classroom. Sci. Educ. 102, 1007–1028. doi: 10.1002/sce.21454

Pane, J. F., Griffin, B. A., McCaffrey, D. F., and Karam, R. (2014). Effectiveness of cognitive tutor algebra I at Scale. Educ. Eval. Policy Anal. 36, 127–144. doi: 10.3102/0162373713507480

Panigrahi, C. M. A. (2020). Use of artificial intelligence in education. Manage. Account. 55, 64–67. doi: 10.1371/journal.pone.0229596

Paviotti, G., Rossi, P. G., and Zarka, D. (2013). Intelligent Tutoring Systems: An Overview. Lecce: Pensa Multimedia.

Petersen, N., and Batchelor, J. (2019). Preservice student views of teacher judgement and practice in the age of artificial intelligence. South. Afr. Rev. Educ. Educ. Prod. 25, 70–88.

Prensky, M. (2008). Backup Education? Too many teachers see education as preparing kids for the past, not the future. Educ. Technol. 48, 1–3.

Proske, A., Narciss, S., and McNamara, D. S. (2012). Computer-based scaffolding to facilitate students’ development of expertise in academic writing. J. Res. Read. 35, 136–152. doi: 10.1111/j.1467-9817.2010.01450.x

Puri, N., and Mishra, G. (2020). “Artificial Intelligence (AI) in Classrooms: The Need of the Hour,” in Transforming Management Using Artificial Intelligence Techniques, eds V. Garg and R. Agrawal (Boca Raton: CRC Press), 169–183. doi: 10.1201/9781003032410-12

Qin, F., Li, K., and Yan, J. (2020). Understanding user trust in artificial intelligence-based educational systems: evidence from China. Br. J. Educ. Technol. 51, 1693–1710. doi: 10.1111/bjet.12994

Radford, A., Wu, J., Child, R., Luan, D., Amodei, D., and Sutskever, I. (2019). Language Models are Unsupervised Multitask Learners. San Francisco: OpenAI blog, 1.

Reiser, B. J. (2004). Scaffolding complex learning: the mechanisms of structuring and problematizing student work. J. Learn. Sci. 13, 273–304. doi: 10.1207/s15327809jls1303_2

Roll, I., and Wylie, R. (2016). Evolution and revolution in artificial intelligence in education. Int. J. Artif. Intell. Educ. 26, 582–599. doi: 10.1007/s40593-016-0110-3

Ryu, M., and Han, S. (2018). The educational perception on artificial intelligence by elementary school teachers. J. Korean Assoc. Inform. Educ. 22, 317–324. doi: 10.1016/j.ridd.2019.01.002

Sakulkueakulsuk, B., Witoon, S., Ngarmkajornwiwat, P., Pataranutaporn, P., Surareungchai, W., Pataranutaporn, P., et al. (2018). “Kids making AI: Integrating machine learning, gamification, and social context in STEM education,” in 2018 IEEE international conference on teaching, assessment, and learning for engineering (TALE), (Wollongong: IEEE), 1005–1010. doi: 10.1109/TALE.2018.8615249

Sánchez-Prieto, J. C., Cruz-Benito, J., Therón Sánchez, R., and García Peñalvo, F. J. (2020). Assessed by Machines: development of a TAM-Based Tool to Measure AI-based Assessment Acceptance Among Students. Int. J. Interact. Multi. Artif. Intell. 6:80. doi: 10.9781/ijimai.2020.11.009

Saputri, A. A. (2021). Student science process skills through the application of computer based scaffolding assisted by PhET simulation. At-Taqaddum 13, 21–38. doi: 10.21580/at.v13i1.8151

Semerci, A., and Aydin, M. K. (2018). Examining high school teachers’ attitudes towards ICT use in education. Int. J. Progress. Educ. 14, 93–105. doi: 10.29329/ijpe.2018.139.7

Spain, R., Rowe, J., Smith, A., Goldberg, B., Pokorny, R., Mott, B., et al. (2021). A reinforcement learning approach to adaptive remediation in online training. J. Def. Model. Simul. doi: 10.1177/15485129211028317

Sperling, A., and Lickerman, D. (2012). “Integrating AI and machine learning in software engineering course for high school students,” in Proceedings of the 17th ACM annual conference on Innovation and technology in computer science education, (New York: Association for Computing Machinery), 244–249. doi: 10.1145/2325296.2325354

Supriyadi, S. (2021). Evaluation instrument development for scientific writing instruction with a constructivism approach. Tech. Soc. Sci. J. 21, 345–363. doi: 10.47577/tssj.v21i1.3877

Tallvid, M. (2016). Understanding teachers’ reluctance to the pedagogical use of ICT in the 1: 1 classroom. Educ. Inf. Technol. 21, 503–519. doi: 10.1007/s10639-014-9335-7

Tan, S. C. (2000). Supporting Collaborative Problem Solving Through Computer-Supported Collaborative Argumentation. Unpublished Doctoral Dissertation. University Park, PA: The Pennsylvania State University.

Topal, A. D., Eren, C. D., and Geçer, A. K. (2021). Chatbot application in a 5th grade science course. Educ. Inf. Technol. 26, 6241–6265. doi: 10.1007/s10639-021-10627-8

Toth, E. E., Suthers, D. D., and Lesgold, A. M. (2002). “Mapping to know”: the effects of representational guidance and reflective assessment on scientific inquiry. Sci. Educ. 86, 264–286. doi: 10.1002/sce.10004

Trujillo-Torres, J. M., Hossein-Mohand, H., Gómez-García, M., Hossein-Mohand, H., and Cáceres-Reche, M. P. (2020). Mathematics teachers’ perceptions of the introduction of ICT: the relationship between motivation and use in the teaching function. Mathematics 8:2158. doi: 10.3390/math8122158

Tshuma, N. (2021). The vulnerable insider: navigating power, positionality and being in educational technology research. Learn. Media Technol. 46, 218–229. doi: 10.1080/17439884.2021.1867572

Tucker, C., Jackson, K., and Park, J. J. (2020). “Exploring the future of engineering education: Perspectives from a workshop on artificial intelligence and the future of STEM and societies,” in ASEE Annual Conference and Exposition, Conference Proceedings, (Washington: American Society of Engineering Education).

Turing, A. (1950). Computing machinery and intelligence. Mind 59, 433–460. doi: 10.1093/mind/LIX.236.433

United Nations Educational, Scientific and Cultural Organization [UNESCO] (2019). The Challenge and Opportunities of Artificial Intelligence in Education. Paris: The United Nations Educational, Scientific and Cultural Organization.

Vachovsky, M. E., Wu, G., Chaturapruek, S., Russakovsky, O., Sommer, R., and Fei-Fei, L. (2016). “Toward more gender diversity in CS through an artificial intelligence summer program for high school girls,” in Proceedings of the 47th ACM Technical Symposium on Computing Science Education, (Memphis: Association for Computing Machinery), 303–308. doi: 10.1145/2839509.2844620

Walker, A. S. (2019). Perusall: harnessing AI robo-tools and writing analytics to improve student learning and increase instructor efficiency. J. Writ. Anal. 3, 227–263. doi: 10.37514/JWA-J.2019.3.1.11

Wiley, J., Goldman, S. R., Graesser, A. C., Sanchez, C. A., Ash, I. K., and Hemmerich, J. A. (2009). Source evaluation, comprehension, and learning in Internet science inquiry tasks. Am. Educ. Res. J. 46, 1060–1106. doi: 10.3102/0002831209333183

Wood, D., Bruner, J. S., and Ross, G. (1976). The role of tutoring in problem solving. J. Child Psychol. Psychiatry 17, 89–100. doi: 10.1111/j.1469-7610.1976.tb00381.x

Wood, E., Mueller, J., Willoughby, T., Specht, J., and Deyoung, T. (2005). Teachers’ perceptions: barriers and supports to using technology in the classroom. Educ. Commun. Inf. 5, 183–206. doi: 10.1080/14636310500186214

Yang, S. J. (2021). Guest editorial: precision education - a new challenge for AI in education. J. Educ. Technol. Soc. 24, 105–108.

Yurtseven Avci, Z., O’Dwyer, L. M., and Lawson, J. (2020). Designing effective professional development for technology integration in schools. J. Comput. Assist. Learn. 36, 160–177. doi: 10.1111/jcal.12394

Zhai, X., Haudek, K. C., Shi, L., Nehm, R. H., and Urban-Lurain, M. (2020). From substitution to redefinition: a framework of machine learning-based science assessment. J. Res. Sci. Teach. 57, 1430–1459. doi: 10.1002/tea.21658

Keywords: artificial intelligence, learning support, scaffolding, scientific writing, teacher’s perception

Citation: Kim NJ and Kim MK (2022) Teacher’s Perceptions of Using an Artificial Intelligence-Based Educational Tool for Scientific Writing. Front. Educ. 7:755914. doi: 10.3389/feduc.2022.755914

Received: 09 August 2021; Accepted: 22 February 2022; Published: 29 March 2022.

Edited by:

Maria Meletiou-Mavrotheris, European University Cyprus, Cyprus

Reviewed by:

Piedade Vaz-Rebelo, University of Coimbra, Portugal

Dionysia Bakogianni, National and Kapodistrian University of Athens, Greece

Copyright © 2022 Kim and Kim. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Nam Ju Kim, namju.kim@miami.edu
